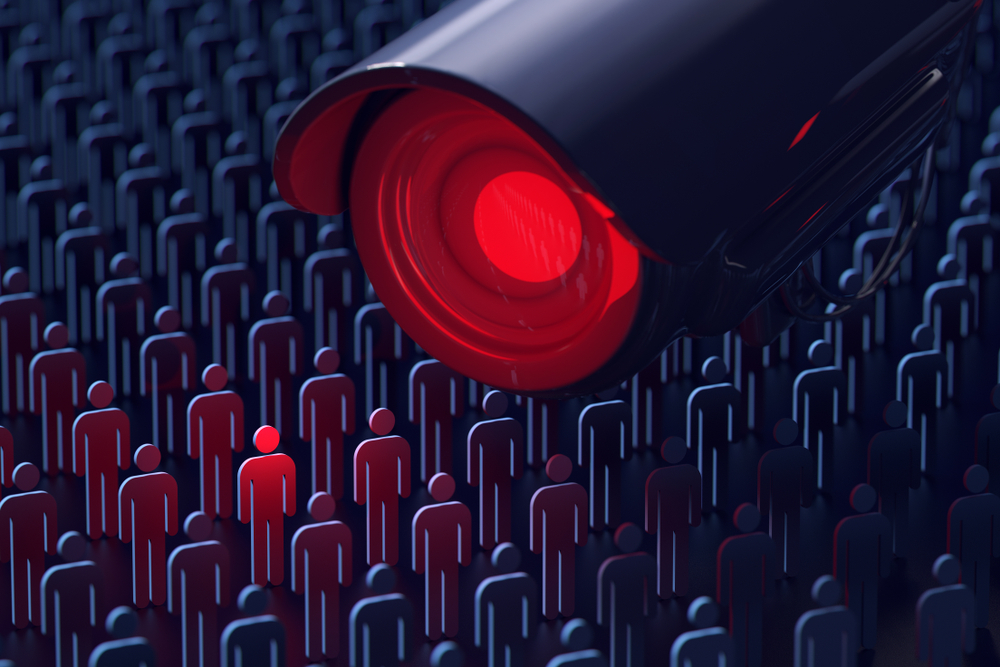

The rapid advancement of artificial intelligence (AI) and surveillance technologies has intensified global debates around privacy, human rights, and national sovereignty. As governments increasingly integrate AI into their national security apparatus, the use of these technologies across borders raises profound ethical and diplomatic concerns. Nowhere is this more evident than in the tensions between major powers such as the United States, China, and members of the European Union. These tensions stem not just from strategic competition but also from deeper disagreements over values, governance norms, and the role of technology in society. This article discusses the intersection of AI, surveillance and sovereignty, especially among major powers.

AI Surveillance and State Power

Traditional notions of sovereignty revolve around a state’s supreme authority within its territorial boundaries. However, AI-powered surveillance inherently challenges this framework. Technologies developed in one nation (e.g., Pegasus spyware from Israel, advanced facial recognition from China or the US) can be deployed globally, enabling states or non-state actors to surveil individuals and entities within another sovereign state without physical presence. This constitutes a direct violation of territorial integrity and non-interference principles.

Furthermore, AI-powered surveillance systems, ranging from facial recognition and predictive policing to biometric tracking and data mining, offer unprecedented capabilities to monitor, analyze, and control populations. While governments cite national security, crime prevention, and public health as justifications for deploying these tools, such systems can also be used to suppress dissent, violate privacy, and engage in discriminatory practices. For example, China has built one of the most comprehensive surveillance infrastructures in the world, integrating AI into its social credit system and public security networks. These technologies are not only employed domestically but also exported to other countries, often as part of the Belt and Road Initiative. Huawei, Hikvision and other Chinese firms have provided AI-driven surveillance equipment to dozens of nations, including some with authoritarian regimes.

There is also the threat of “data colonialism.” The massive datasets required to train effective AI surveillance tools are often scraped indiscriminately from the global internet, including data on citizens of other countries. Powerful nations or corporations thereby extract valuable digital resources from less powerful regions, undermining those regions’ control over their citizens’ information and potentially enabling external manipulation.

Infrastructure dominance cannot be ignored in this debate, because the deployment of critical surveillance infrastructure (such as 5G networks with embedded surveillance capabilities and smart city platforms) by foreign companies has become a sovereignty issue. Fears that such infrastructure could contain backdoors for espionage or be subject to remote control by a foreign power (as seen in debates surrounding Huawei) have led nations to restrict market access, citing national security. This has transformed commercial competition into a matter of safeguarding sovereignty. Western nations, particularly the United States and its allies, have raised concerns about these exports, arguing that they enable digital authoritarianism and erode democratic norms globally. In response, the U.S. has imposed export restrictions and sanctions on certain Chinese tech firms. This has further exacerbated the tech rivalry between Washington and Beijing, with each side accusing the other of weaponizing technology to advance geopolitical aims.

Ethical and Human Rights Implications

Beyond espionage, ethical concerns also arise over data sovereignty—the principle that data is subject to the laws and governance of the nation where it is collected. In an era where data flows freely across borders, determining who owns, controls, and has access to sensitive information becomes highly contentious. The European Union’s General Data Protection Regulation (GDPR) reflects a robust approach to data sovereignty and privacy, setting strict guidelines for data transfers to non-EU countries. The GDPR has clashed with looser standards in the U.S. and more opaque practices in authoritarian regimes, fueling diplomatic disputes and complicating transatlantic tech cooperation.

From an ethical perspective, AI-driven surveillance challenges foundational human rights such as freedom of expression, privacy, and the presumption of innocence. Surveillance that targets individuals based on algorithmic predictions or biometric markers can lead to false positives, racial profiling, and other forms of discrimination. When such technologies are used in cross-border contexts, such as immigration control, refugee management, or international law enforcement, their implications become even more complex. For example, AI systems deployed at international borders to assess traveler risk or detect fraudulent asylum claims can inadvertently discriminate against marginalized groups. These systems often operate without transparency or accountability, making it difficult for affected individuals to challenge decisions or seek redress. In authoritarian contexts, cross-border surveillance can also be used to monitor diaspora communities or dissidents abroad, violating not only ethical norms but also international human rights law.

International organizations, including the United Nations and the OECD, have called for greater oversight and the development of global standards to govern AI and surveillance technologies. However, consensus has been elusive, as countries prioritize different values and strategic interests. While democratic nations emphasize transparency and accountability, authoritarian states focus on control and state security. This divergence hampers efforts to build a unified ethical framework and deepens mistrust among nations.

In conclusion, in a world where cyberspace has become a battlefield, addressing the ethical and diplomatic challenges of AI surveillance requires coordinated global action focused on transparency, accountability, and enforceable standards. Governments should disclose how surveillance technologies are developed and used, especially across borders, while also pursuing binding international agreements on AI ethics that protect human rights. Technology companies must be held responsible for the overseas use of their products through export controls, ethical audits, and due diligence. Public-private collaboration is essential to ensure AI tools are secure and respectful of human dignity. Additionally, civil society, academia, and watchdog organizations should play a key role in monitoring practices and shaping a more ethical global digital framework. Without these efforts, the global community may find itself in a future where technological power undermines the fundamental rights and freedoms that define open societies.

By Patricia Namakula

Director of Research and PR